Build hands-free web experiences with real-world JavaScript examples that listen, understand, and act.

Introduction

Imagine this:

You’re building a dashboard or a to-do app, and instead of clicking buttons, you just say what you want: “Add a new task,” “Switch to dark mode,” or “Show analytics.”

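That’s all possible right now with the SpeechRecognition API: no frameworks, no server setup of your own, and no extra SDK to install. (Keep in mind that some browsers, Chrome included, send the audio to a cloud speech service for transcription, so recognition is not always fully local.)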

In this post, we’ll go beyond theory and walk through real, working examples of how to use SpeechRecognition to build voice-driven features in your JavaScript apps.

By the end, you’ll have a working toolkit of ideas you can plug directly into your own projects.

1. Quick Setup: One Line That Changes Everything

The SpeechRecognition API (part of the Web Speech API) lets browsers transcribe voice input into text.

Here’s the basic setup you’ll use in every example:

    const SpeechRecognition = window.SpeechRecognition || window.webkitSpeechRecognition;
    const recognition = new SpeechRecognition();
    recognition.lang = 'en-US';          // language to recognize
    recognition.interimResults = false;  // deliver final results only

Start listening:

    recognition.start();

When the user speaks, the API emits an event containing the transcribed text:

    recognition.onresult = (event) => {
      // First result, top-ranked alternative
      console.log(event.results[0][0].transcript);
    };

That’s it. Your browser can now turn speech into text.
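Recognition can also fail, for instance when the user denies microphone access. The sketch below maps the error codes that the API reports on `event.error` to readable hints. The helper name `describeRecognitionError` and the message wording are assumptions made for illustration, not part of the API.

```javascript
// Maps SpeechRecognition error codes (event.error) to user-facing hints.
// The wording of each hint is illustrative only.
const ERROR_HINTS = new Map([
  ['not-allowed', 'Microphone permission was denied.'],
  ['service-not-allowed', 'Speech recognition is blocked in this browser or context.'],
  ['no-speech', 'No speech was detected. Try again.'],
  ['audio-capture', 'No microphone was found.'],
  ['network', 'A network problem interrupted recognition.'],
  ['aborted', 'Listening was cancelled.'],
]);

// Hypothetical helper: returns a hint for a known code, or a generic fallback.
function describeRecognitionError(code) {
  return ERROR_HINTS.get(code) ?? `Speech recognition failed (${code}).`;
}

// Browser wiring (assumes the `recognition` instance from the setup above):
// recognition.onerror = (event) => console.warn(describeRecognitionError(event.error));
```

Keeping the mapping in a plain function means you can show the same hint in the page instead of the console.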

2. Example 1: Voice-Powered Dark Mode Toggle

Let’s start simple: a command that toggles light and dark themes.

    <button id="start">🎤 Activate Voice</button>
    <p id="output">Say "Dark mode" or "Light mode"</p>

    <script>
      const SpeechRecognition = window.SpeechRecognition || window.webkitSpeechRecognition;
      const recognition = new SpeechRecognition();
      recognition.lang = 'en-US';

      const output = document.getElementById('output');
      const start = document.getElementById('start');

      // Start listening when the user clicks the button
      start.addEventListener('click', () => {
        recognition.start();
        output.textContent = 'Listening...';
      });

      recognition.onresult = (e) => {
        const command = e.results[0][0].transcript.toLowerCase();
        output.textContent = `You said: ${command}`;

        // Match on a keyword so "dark", "dark mode", or "go dark" all work
        if (command.includes('dark')) {
          document.body.style.background = '#111';
          document.body.style.color = '#fff';
        } else if (command.includes('light')) {
          document.body.style.background = '#fff';
          document.body.style.color = '#000';
        }
      };
    </script>

Say “dark mode” and your app instantly changes theme.

A simple way to make your UI accessible and futuristic.
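Each recognition alternative also carries a `confidence` score between 0 and 1, which can help you avoid acting on a misheard command. Here is a minimal sketch; the helper name `confidentCommand` and the 0.6 threshold are arbitrary choices, and some engines may report 0 for every result, so test in your target browsers before relying on it.

```javascript
// Hypothetical helper: returns the lowercased top transcript, or null when
// the engine's confidence is below `minConfidence` (0.6 is an arbitrary default).
function confidentCommand(result, minConfidence = 0.6) {
  const best = result[0]; // top-ranked alternative
  if (!best || best.confidence < minConfidence) return null;
  return best.transcript.trim().toLowerCase();
}

// Browser wiring (inside the handler from the example above):
// recognition.onresult = (e) => {
//   const command = confidentCommand(e.results[0]);
//   if (command === null) {
//     output.textContent = 'Sorry, please repeat that.';
//     return;
//   }
//   // ...toggle the theme as before
// };
```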

3. Example 2: Voice Search Box

Perfect for dashboards, product listings, or knowledge bases.

    <input id="searchBox" placeholder="Say your search..." />
    <button id="mic">🎙️</button>

    <script>
      const recognition = new (window.SpeechRecognition || window.webkitSpeechRecognition)();
      recognition.lang = 'en-US';

      const input = document.getElementById('searchBox');
      const mic = document.getElementById('mic');

      mic.addEventListener('click', () => {
        recognition.start();
      });

      recognition.onresult = (e) => {
        // Copy the spoken phrase into the search box
        const text = e.results[0][0].transcript;
        input.value = text;
        console.log('Searching for:', text);
      };
    </script>

Now your users can just say what they’re searching for, no typing required.

Pro Tip: You can trigger an actual search request automatically inside the onresult handler.
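As a rough sketch of that tip, the helper below turns a transcript into a search URL that you could pass to `fetch`. The endpoint `https://example.com/search`, the `q` parameter, and the name `buildSearchUrl` are all hypothetical; substitute whatever your backend expects.

```javascript
// Hypothetical helper: builds a search URL from a spoken query.
// The default endpoint and the `q` parameter are placeholders.
function buildSearchUrl(transcript, base = 'https://example.com/search') {
  const url = new URL(base);
  // Some recognizers add trailing punctuation; strip it before searching.
  const query = transcript.trim().replace(/[.?!]+$/, '');
  url.searchParams.set('q', query); // handles URL encoding for us
  return url.toString();
}

// Browser wiring (inside the onresult handler from the example above):
// fetch(buildSearchUrl(text))
//   .then((response) => response.json())
//   .then((results) => console.log(results));
```

Using `URL` and `searchParams` rather than string concatenation keeps spaces and special characters in the spoken query safely encoded.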

4. Example 3: Voice-Activated Scrolling

A fun, practical demo perfect for long documentation pages.

    // Assumes the `recognition` instance from the setup section
    recognition.onresult = (event) => {
      const text = event.results[0][0].transcript.toLowerCase();

      if (text.includes('scroll down')) {
        window.scrollBy(0, 400);
      } else if (text.includes('scroll up')) {
        window.scrollBy(0, -400);
      }
    };

Now just say “Scroll down” or “Scroll up” and watch the page respond.

You can even add speed variation, for example, “Scroll faster” could move by 1000px.
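One way to sketch the speed variation is to compute the scroll distance in a small function and keep the handler thin. The name `scrollDeltaFor` and the choice to treat a bare “scroll faster” as scrolling down are assumptions for this example.

```javascript
// Hypothetical helper: converts a spoken phrase into a vertical scroll distance.
// Returns 0 when the phrase is not a scroll command.
function scrollDeltaFor(text) {
  const phrase = text.toLowerCase();
  if (!phrase.includes('scroll')) return 0;

  const distance = phrase.includes('faster') ? 1000 : 400;
  // Anything without "up" (including a bare "scroll faster") scrolls down.
  return phrase.includes('up') ? -distance : distance;
}

// Browser wiring:
// recognition.onresult = (event) => {
//   const delta = scrollDeltaFor(event.results[0][0].transcript);
//   if (delta !== 0) window.scrollBy({ top: delta, behavior: 'smooth' });
// };
```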

5. Example 4: Dictation Mode for Textareas

Let users fill out text fields with their voice, great for chat apps or forms.

    <textarea id="note" placeholder="Speak your message..."></textarea>
    <button id="start">🎤 Start Dictation</button>

    <script>
      const recognition = new (window.SpeechRecognition || window.webkitSpeechRecognition)();
      recognition.lang = 'en-US';
      recognition.continuous = false; // one phrase per click

      const note = document.getElementById('note');
      const start = document.getElementById('start');

      start.addEventListener('click', () => recognition.start());

      recognition.onresult = (e) => {
        // Append each recognized phrase to the existing text
        const text = e.results[0][0].transcript;
        note.value += text + ' ';
      };
    </script>

This can be a lifesaver for accessibility or hands-free input on touchscreen devices.
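For longer dictation you may prefer `continuous = true` with `interimResults = true`, so users see text while they are still speaking. In that mode each result has an `isFinal` flag, and the event's `resultIndex` tells you where the new results start. The helper `splitTranscripts` below is a hypothetical sketch of how to separate the two kinds of text.

```javascript
// Hypothetical helper: walks a SpeechRecognitionResultList-like sequence from
// `resultIndex` and separates finalized text from still-changing interim text.
function splitTranscripts(results, resultIndex = 0) {
  let finalText = '';
  let interimText = '';
  for (let i = resultIndex; i < results.length; i++) {
    const transcript = results[i][0].transcript; // top-ranked alternative
    if (results[i].isFinal) {
      finalText += transcript;
    } else {
      interimText += transcript;
    }
  }
  return { finalText, interimText };
}

// Browser wiring (assumes `recognition` and `note` from the example above):
// recognition.continuous = true;
// recognition.interimResults = true;
// let committed = '';
// recognition.onresult = (e) => {
//   const { finalText, interimText } = splitTranscripts(e.results, e.resultIndex);
//   committed += finalText;
//   note.value = committed + interimText; // interim text is replaced on each event
// };
```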

6. Example 5: A Simple Voice Assistant (Listen + Respond)

Now let’s make the browser talk back using the SpeechSynthesis API.

    function speak(text) {
      const utterance = new SpeechSynthesisUtterance(text);
      speechSynthesis.speak(utterance);
    }

    recognition.onresult = (event) => {
      const command = event.results[0][0].transcript.toLowerCase();

      if (command.includes('hello')) {
        speak('Hello there!');
      } else if (command.includes('time')) {
        // Format as e.g. "2:05 PM" so single-digit minutes are read correctly
        const time = new Date().toLocaleTimeString('en-US', { hour: 'numeric', minute: '2-digit' });
        speak(`The time is ${time}`);
      } else {
        speak(`You said ${command}`);
      }
    };

Now you have a mini voice assistant that listens, reacts to simple keywords, and replies, with no backend code of your own.
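As the list of commands grows, an if/else chain gets hard to maintain. One possible alternative is a small command table that maps keywords to replies. The names `COMMANDS`, `replyFor`, and `spokenTime` are made up for this sketch, and the time is formatted by hand so the result does not depend on the browser's locale.

```javascript
// Hypothetical helper: formats a Date as "h:mm AM/PM" without locale APIs.
function spokenTime(date) {
  const hours24 = date.getHours();
  const hours12 = hours24 % 12 || 12; // 0 and 12 both become 12
  const minutes = String(date.getMinutes()).padStart(2, '0');
  const suffix = hours24 < 12 ? 'AM' : 'PM';
  return `${hours12}:${minutes} ${suffix}`;
}

// Each entry pairs a keyword with a function that produces the reply.
const COMMANDS = [
  { keyword: 'hello', reply: () => 'Hello there!' },
  { keyword: 'time', reply: (now) => `The time is ${spokenTime(now)}` },
];

// Returns the reply for the first matching keyword, or echoes the command.
function replyFor(command, now = new Date()) {
  const match = COMMANDS.find(({ keyword }) => command.includes(keyword));
  return match ? match.reply(now) : `You said ${command}`;
}

// Browser wiring (assumes `recognition` and `speak` from the example above):
// recognition.onresult = (event) => {
//   speak(replyFor(event.results[0][0].transcript.toLowerCase()));
// };
```

Adding a command then means adding one entry to the table rather than another branch.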

7. Example 6: Multi-Language Voice Recognition

Add instant multilingual support for global apps.

    recognition.lang = 'fr-FR'; // French

You can even create a dropdown for users to switch languages dynamically:

    <select id="lang">
      <option value="en-US">English</option>
      <option value="es-ES">Spanish</option>
      <option value="fr-FR">French</option>
    </select>

    <script>
      const recognition = new (window.SpeechRecognition || window.webkitSpeechRecognition)();

      // The new language applies the next time recognition starts
      document.getElementById('lang').addEventListener('change', (e) => {
        recognition.lang = e.target.value;
      });
    </script>
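You could also choose a sensible default from the user's browser language instead of always starting in English. The sketch below picks the closest entry from the dropdown's list; `SUPPORTED_LANGS` and `pickRecognitionLang` are hypothetical names, and the fallback to the first entry is just one possible policy.

```javascript
// Language tags offered in the dropdown above.
const SUPPORTED_LANGS = ['en-US', 'es-ES', 'fr-FR'];

// Hypothetical helper: returns the best supported tag for `preferred`.
// Tries an exact (case-insensitive) match, then the same primary language,
// then falls back to the first supported tag.
function pickRecognitionLang(preferred, supported = SUPPORTED_LANGS) {
  if (!preferred) return supported[0];

  const wanted = preferred.toLowerCase();
  const exact = supported.find((tag) => tag.toLowerCase() === wanted);
  if (exact) return exact;

  const primary = wanted.split('-')[0]; // "fr-CA" -> "fr"
  const sameLanguage = supported.find((tag) => tag.toLowerCase().split('-')[0] === primary);
  return sameLanguage ?? supported[0];
}

// Browser wiring:
// recognition.lang = pickRecognitionLang(navigator.language);
// document.getElementById('lang').value = recognition.lang;
```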

8. Example 7: Continuous Listening (Like a Real Assistant)

By default, SpeechRecognition stops after one phrase.

You can make it restart automatically for hands-free interaction:

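    recognition.continuous = true;

    // The browser may still end a session (for example, after a long silence),
    // so restart whenever recognition ends
    recognition.onend = () => recognition.start();

    recognition.start();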

Now your app keeps listening indefinitely, perfect for dashboards or kiosks.

Just remember to provide a Stop button for user control.
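A Stop button only works if the automatic restart respects it. Here is a minimal sketch that guards the restart with a flag; `createListeningController` is a hypothetical helper, and it accepts any object with `start()`, `stop()`, and an `onend` property, which a SpeechRecognition instance provides.

```javascript
// Hypothetical helper: wraps a recognition object so that it restarts
// automatically only while the user wants it to keep listening.
function createListeningController(recognition) {
  let shouldListen = false;

  recognition.onend = () => {
    if (shouldListen) recognition.start(); // restart only if not stopped by the user
  };

  return {
    start() {
      if (shouldListen) return; // already listening; avoid calling start() twice
      shouldListen = true;
      recognition.start();
    },
    stop() {
      shouldListen = false; // clear the flag first so onend does not restart
      recognition.stop();
    },
  };
}

// Browser wiring (the button ids are placeholders):
// const controller = createListeningController(recognition);
// document.getElementById('start').addEventListener('click', () => controller.start());
// document.getElementById('stop').addEventListener('click', () => controller.stop());
```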

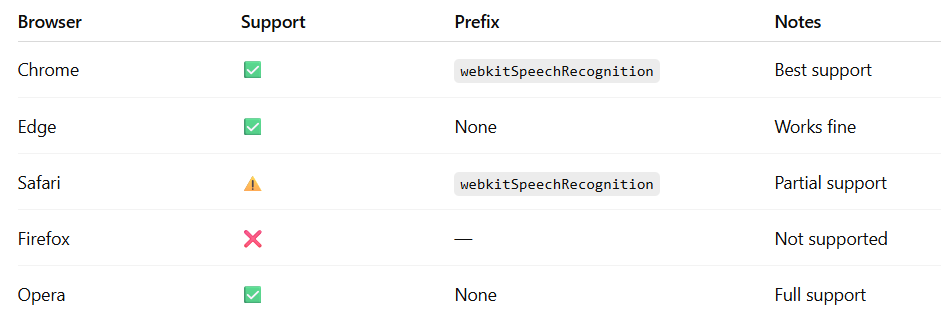

9. Browser Support Summary

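Support varies by browser: Chromium-based browsers such as Chrome and Edge, as well as Safari, expose the API (typically as webkitSpeechRecognition), while Firefox does not enable it by default. And yes, it must run over HTTPS (or on localhost during development) for microphone access.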
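Because support and the secure-context requirement differ between environments, it can help to check both before showing any voice controls. The helper `voiceSupport` below is a hypothetical sketch; it takes the global object as a parameter so the check is easy to reuse.

```javascript
// Hypothetical helper: reports whether speech recognition looks usable.
// Pass `window` in the browser.
function voiceSupport(globalObj) {
  const Ctor = globalObj.SpeechRecognition || globalObj.webkitSpeechRecognition || null;
  return {
    Ctor,                                              // constructor to use, or null
    hasRecognition: Ctor !== null,                     // API is exposed
    secureContext: globalObj.isSecureContext === true, // HTTPS or localhost
  };
}

// Browser wiring (the `mic` id is a placeholder):
// const support = voiceSupport(window);
// if (!support.hasRecognition || !support.secureContext) {
//   document.getElementById('mic').hidden = true; // fall back to typing
// } else {
//   const recognition = new support.Ctor();
// }
```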

10. Real-World Use Cases

- Voice search in e-commerce or dashboards

- Hands-free navigation for accessibility

- Form filling for medical, logistics, or field apps

- Voice-controlled smart home dashboards

- Voice chat interfaces for interactive apps

Developers are using SpeechRecognition to make the web more accessible and more natural, and the best part is, it’s all native JavaScript.

Conclusion

The SpeechRecognition API is one of the most underrated tools in the web platform.

With just a few lines of code, you can make your browser listen, understand, and act, without adding a framework or running a speech backend of your own.

Whether you’re building an assistant, a search interface, or a smarter form, voice control can make your app faster and more human.

Pro Tip: Combine SpeechRecognition with the BroadcastChannel API to sync voice commands across tabs for multi-view web apps.
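A rough sketch of that idea: wrap a channel so that each tab can both send and receive voice commands. The channel name `voice-commands`, the message shape, and the helper `createCommandSync` are assumptions for this example. Note that a BroadcastChannel does not deliver a message back to the tab that sent it, so the sending tab should also apply the command locally.

```javascript
// Hypothetical helper: shares voice commands over a BroadcastChannel-like
// object (anything with postMessage() and an onmessage property).
function createCommandSync(channel, onCommand) {
  channel.onmessage = (event) => {
    const message = event.data;
    // Ignore anything that is not one of our command messages
    if (message && message.type === 'voice-command') {
      onCommand(message.command);
    }
  };

  return {
    send(command) {
      channel.postMessage({ type: 'voice-command', command });
    },
  };
}

// Browser wiring (`applyCommand` stands in for your own handler):
// const sync = createCommandSync(new BroadcastChannel('voice-commands'), applyCommand);
// recognition.onresult = (event) => {
//   const command = event.results[0][0].transcript.toLowerCase();
//   applyCommand(command); // this tab
//   sync.send(command);    // other tabs
// };
```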

Call to Action

Which of these examples would you try first?

Share your favorite voice command idea in the comments and bookmark this post to use it in your next JavaScript project.
